26 Jul

FraudGPT is another AI-centric threat model to remain aware of

The dangers of FraudGPT and malicious AI markets

With the popularity of ChatGPT, numerous lookalikes have followed, each hoping to gain a foothold in the emerging market of "AI" services. These days, it's common to see big tech and similar enterprises roll out their own variations of AI or ChatGPT. But as this technology continues to find its place in the world, it isn't without problems. In fact, one of the biggest concerns involves automated attacks and AI-generated malware.

While services like ChatGPT were quick to establish rules that block prompts for malware code and malicious scripts, the hacker world has tirelessly worked to develop its own AI for malware. This is not surprising. Long before the emergence of AI and code prompts, dark web markets existed (and still do) for various illicit services. For example, threat actors can purchase ransomware-as-a-service (RaaS) kits with baked-in target lists. These kits allow them to expedite attacks and launch complex threat campaigns even if they have limited knowledge of their targets or of computer systems.

Now, AI is entering the malicious market with no sign of slowing down. Two such models have already emerged: WormGPT and, close behind it, FraudGPT.

FraudGPT's services are already being sold on specific dark web forums and Telegram channels.

Capabilities of a malicious AI model

The goal of FraudGPT is to further automate and expedite attack chains by generating spear phishing emails, software cracks, and malicious code. Users can, in principle, prompt the engine for a specific kind of malicious code or request. FraudGPT began circulating in late July 2023 on a subscription basis: subscribers pay $200 per month, $1,000 for six months, or $1,700 for a full year. These tiers mirror mainstream subscription packages for legitimate software, illustrating the alarming trend of packaging malware as a service.

FraudGPT's creator also claims the model has no limits, whereas ChatGPT and similar AI tools flag certain requests, prompts, and words. At least 3,000 confirmed sales have been reported, marketed towards "fraudsters and hackers."

The creator, "CanadianKingpin," claims the software can produce undetectable malware, discover vulnerabilities in networks, and produce lists of leaked networks where available. Chatbots like FraudGPT are built on top of a large language model (LLM), but the LLM underlying FraudGPT is not yet known. Identifying it would help cybersecurity teams and agencies develop ways to disable the tool or build defenses against it.

From phishing to AI

Historically, the technical knowledge and complexity that cyberattacks demanded meant only select groups could launch large-scale attacks. Business email compromise attacks and malware campaigns were typically carried out by people with IT expertise and experience.

Now, however, with these service kits and malicious AI models like FraudGPT and WormGPT, even novice actors can equip themselves with exceptionally dangerous tools.

The most alarming trend, however, is the boldness of the sellers and how readily malicious services can be found online by anyone who searches in the right places.

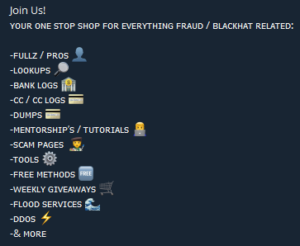

For example, beyond FraudGPT, these markets advertise service offerings just as you would find for any other business:

This boldness in claims and advertising comes from sellers escaping any form of penalty while serving an expanding market of eager buyers.

Monitoring for attacks

Speed is everything. Defending networks, personal resources, and agencies from AI-generated attacks relies on advanced telemetry and automated flagging systems. These systems should report unusual activity so that data theft or exfiltration can be halted. Most attackers use their campaigns to steal valuable credentials, which are then funneled into further phishing emails or fraudulent wire transfers.

Small and midsize businesses (SMBs) and enterprises unsure of their cybersecurity posture should also consider investing in backup resources, cloud storage, and managed service provider (MSP) support.
